
*Note: All of the projects listed here are in chronological order, so the first few are quite old. I chose to keep them because I'm still proud of them, and together they show the progress I've made over the years.

Project #1: Space Raiders - (Fall 2020)

- Where it all began: my first program

This is my first ever program. In my senior year of high school, knowing nothing about Python and almost nothing about programming in general, I decided to learn how to code and ended up making this in a single day. It's a simple game I call Space Raiders, in which you have to dodge an asteroid that speeds up over time; the longer you survive, the higher your score. The video shows some real gameplay!

Project #2: Platform Jumper - (Spring 2021)

- Video game round 2, improving on the last...

This is my second program, improving on the first in both functionality and complexity. It's another game, and the goal is to complete each level by making it from point A to point B without falling or dying in some other way. This game has much more complex features than the last one; here are a few:

- Collision Detection: a dynamic hitbox algorithm that calculates collisions for any arbitrarily defined object, which allows the creation of complex levels.

- Particle Animation Algorithm: Using PyGame's drawing functions, I implemented a trailing particle effect in which each particle starts at a random size and shrinks based on that initial size and how long it has been alive.

- Infinitely Tiling Background: a background that tiles endlessly to create the illusion of movement while keeping the screen completely filled, so immersion is never broken.
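The collision detection described above can be sketched in plain Python. This is a minimal illustration, not the game's actual code: it assumes every hitbox is an axis-aligned rectangle (the same idea as PyGame's `Rect`) and resolves a moving player against a list of level tiles one axis at a time. The `Box` class and `move_and_collide` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned hitbox: top-left corner (x, y) plus width and height."""
    x: float
    y: float
    w: float
    h: float

    def overlaps(self, other: "Box") -> bool:
        # Two rectangles overlap unless one lies entirely to one side of the other.
        return (self.x < other.x + other.w and other.x < self.x + self.w
                and self.y < other.y + other.h and other.y < self.y + self.h)


def move_and_collide(player: Box, dx: float, dy: float, tiles: list) -> bool:
    """Move the player by (dx, dy), pushing it out of any tile it hits.

    Each axis is resolved separately so the player can slide along walls.
    Returns True if the player landed on top of a tile (y grows downward).
    """
    on_ground = False

    player.x += dx
    for tile in tiles:
        if player.overlaps(tile):
            # Snap to whichever side of the tile we were moving toward.
            player.x = tile.x - player.w if dx > 0 else tile.x + tile.w

    player.y += dy
    for tile in tiles:
        if player.overlaps(tile):
            if dy > 0:  # falling: rest on top of the tile
                player.y = tile.y - player.h
                on_ground = True
            else:       # moving up: bump into the tile's underside
                player.y = tile.y + tile.h
    return on_ground
```

Resolving x before y is a common choice because it keeps a falling player from being shoved sideways off a ledge it is merely grazing.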

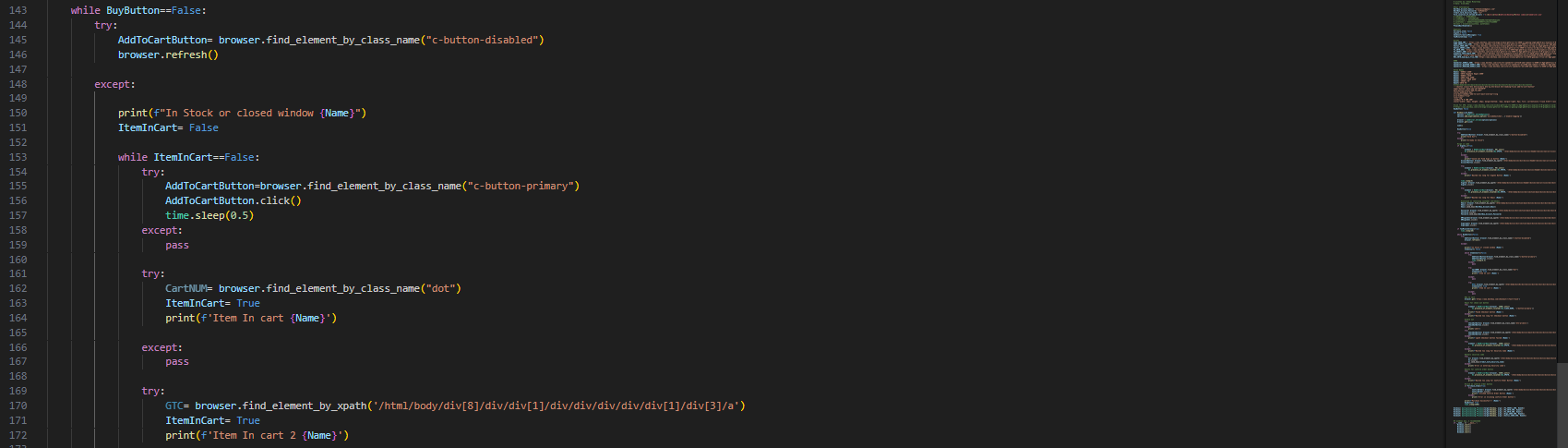
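The particle trail and the tiling background both come down to a little arithmetic. The sketch below is a PyGame-free illustration of the two ideas under stated assumptions: the linear shrink rule (size falls from its random starting value to zero over a fixed lifetime) and the names `Particle`, `LIFETIME`, `update_trail`, and `tile_offsets` are hypothetical, not the original game's code. In a real game loop, each particle would be drawn with `pygame.draw.circle` and each background tile blitted at the returned x positions.

```python
import random
from dataclasses import dataclass

LIFETIME = 30  # hypothetical: number of frames a particle stays alive


@dataclass
class Particle:
    x: float
    y: float
    start_size: float
    age: int = 0

    @property
    def size(self) -> float:
        # Size decays linearly from its random starting value to 0 over LIFETIME frames.
        return self.start_size * max(0.0, 1 - self.age / LIFETIME)


def update_trail(trail: list, emitter_x: float, emitter_y: float) -> list:
    """Age every particle, drop the ones that have shrunk to nothing, spawn a new one."""
    for p in trail:
        p.age += 1
    trail = [p for p in trail if p.size > 0]
    trail.append(Particle(emitter_x, emitter_y, start_size=random.uniform(3, 8)))
    return trail


def tile_offsets(camera_x: float, tile_width: int, screen_width: int) -> list:
    """X positions at which to draw background tiles so the screen is always covered.

    Taking the camera position modulo the tile width makes the pattern repeat
    forever; the +2 covers the remainder of the integer division and the tile
    that is partially scrolled off the left edge.
    """
    start = -(camera_x % tile_width)
    count = screen_width // tile_width + 2
    return [start + i * tile_width for i in range(count)]
```

For example, with the camera at x = 250, 100-pixel tiles, and a 300-pixel screen, tiles are drawn starting at x = -50, so the screen never shows a gap no matter how far the player travels.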

Project #3: GPU Web Scraper - (Spring/Summer 2021)

- Finding a modern GPU during a world-wide microchip shortage

After making two programs and building a custom PC, I needed a GPU so I could take on more ambitious projects. However, this was the peak of the 2021 worldwide chip shortage during the pandemic, when demand for such components was at a record high. So I created a web scraper bot to autonomously monitor websites that sold GPUs at MSRP. I learned a lot of valuable lessons about HTML5 along the way, which later helped me create this very website. After many close calls, the program succeeded twice, securing two highly sought-after GPUs (a Radeon 6600 XT and an RTX 3060 Ti), each of which could have resold for twice what I paid.
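The core check such a bot repeats can be sketched with Python's standard library. This is only an illustration: every retailer uses different markup, so the `data-price` attribute, the "Add to Cart" button text, and the `StockParser` / `product_in_stock` names below are hypothetical, and the bot's actual target sites and parsing rules aren't described here. A polling loop would fetch each page (for example with `urllib.request.urlopen`), run this check, and sleep between requests.

```python
from html.parser import HTMLParser
from typing import Optional


class StockParser(HTMLParser):
    """Collects the listed price and whether an enabled 'Add to Cart' button exists.

    Assumes hypothetical markup: <span data-price="329.99"> and <button>Add to Cart</button>.
    """

    def __init__(self) -> None:
        super().__init__()
        self.price: Optional[float] = None
        self.can_buy = False
        self._in_enabled_button = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "data-price" in attrs:
            self.price = float(attrs["data-price"])
        if tag == "button":
            # A bare `disabled` attribute appears as ("disabled", None).
            self._in_enabled_button = "disabled" not in attrs

    def handle_endtag(self, tag):
        if tag == "button":
            self._in_enabled_button = False

    def handle_data(self, data):
        if self._in_enabled_button and data.strip().lower() == "add to cart":
            self.can_buy = True


def product_in_stock(html: str, msrp: float) -> bool:
    """True if the page offers the product for purchase at or below MSRP."""
    parser = StockParser()
    parser.feed(html)
    return parser.can_buy and parser.price is not None and parser.price <= msrp
```

Checking the price as well as the button matters during a shortage, since a listing can be "in stock" only through a marked-up third-party seller.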

Project #4: Wordle Bot - (Fall 2021 & Spring 2022)

- Destroying wordle with algorithms

This project beats Wordle with 100% accuracy. Using ideas from information theory, the algorithm goes through every possible guess, calculates the average number of words that would remain after that guess, and returns the guesses with the lowest average, that is, the guesses most likely to eliminate the largest number of remaining valid words. In simple terms, it's essentially an extremely optimized process-of-elimination algorithm.
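The scoring idea above can be sketched as follows. This is a simplified illustration rather than the project's code: `feedback` computes Wordle's green/yellow/gray pattern (handling repeated letters the way the game does), and `expected_remaining` scores a guess by the average number of candidates left, assuming every remaining answer is equally likely. Grouping answers by the pattern they would produce means an answer in a group of size n leaves n words, so the average over N candidates is the sum of n² divided by N. All function names are hypothetical.

```python
from collections import Counter


def feedback(guess: str, answer: str) -> str:
    """Wordle pattern: 'G' = right letter, right spot; 'Y' = in word elsewhere; '.' = absent."""
    pattern = ["."] * len(guess)
    unmatched = Counter()
    # First pass: exact matches, and count answer letters still available for yellows.
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            pattern[i] = "G"
        else:
            unmatched[a] += 1
    # Second pass: yellows, consuming available letters so duplicates aren't over-counted.
    for i, g in enumerate(guess):
        if pattern[i] != "G" and unmatched[g] > 0:
            pattern[i] = "Y"
            unmatched[g] -= 1
    return "".join(pattern)


def expected_remaining(guess: str, candidates: list) -> float:
    """Average number of candidates left after playing `guess`."""
    buckets = Counter(feedback(guess, answer) for answer in candidates)
    # An answer in a bucket of size n leaves n candidates, so the average is sum(n^2) / N.
    return sum(n * n for n in buckets.values()) / len(candidates)


def best_guesses(allowed: list, candidates: list, k: int = 5) -> list:
    """The k allowed guesses that leave the fewest candidates on average."""
    return sorted(allowed, key=lambda g: expected_remaining(g, candidates))[:k]


def filter_candidates(guess: str, pattern: str, candidates: list) -> list:
    """Keep only the answers consistent with the feedback actually received."""
    return [w for w in candidates if feedback(guess, w) == pattern]
```

A solver alternates the two steps: pick the guess with the lowest expected remaining count, then filter the candidate list with the real feedback, until one word is left.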

All of the code for this project is available on my GitHub here.

Project #5: Neural Network From Scratch - (Summer 2023)

- Implementing a neural network library completely from scratch

This project implements a fully connected artificial neural network completely from scratch, with the exception of NumPy, which I used to speed up CPU-bound matrix calculations. At its base level, it works similarly to PyTorch: you define layers and combine them to create a network.

When you create a new network, you can define its general structure as a list of numbers, where each element is a layer and its value is the number of neurons in that layer. That is the default, but internally the network is represented as a list of layer objects, so you can also build a network manually, the way PyTorch does it. Training works the same way: a default function handles all of the training in a single call, or you can run the training steps manually for more control.
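A stripped-down sketch of that design follows. It is an illustration, not the library itself: the class names `Dense` and `Network`, the sigmoid activation, mean-squared-error loss, and plain gradient descent are assumptions chosen to keep it short. It shows the two ways of building a network described above (a list of layer sizes, or hand-built layer objects) plus a one-call `train` method.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class Dense:
    """Fully connected layer with a sigmoid activation."""

    def __init__(self, n_in: int, n_out: int, rng: np.random.Generator):
        self.W = rng.normal(0.0, 1.0, size=(n_in, n_out))
        self.b = np.zeros(n_out)

    def forward(self, x):
        self.x = x                                 # cache the input for backprop
        self.a = sigmoid(x @ self.W + self.b)
        return self.a

    def backward(self, grad_a, lr: float):
        grad_z = grad_a * self.a * (1 - self.a)    # chain rule through the sigmoid
        grad_x = grad_z @ self.W.T                 # computed before W changes
        self.W -= lr * self.x.T @ grad_z
        self.b -= lr * grad_z.sum(axis=0)
        return grad_x


class Network:
    def __init__(self, sizes=None, layers=None, seed: int = 0):
        rng = np.random.default_rng(seed)
        if layers is None:
            # Default: sizes like [2, 4, 1] become Dense(2, 4) followed by Dense(4, 1).
            layers = [Dense(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]
        self.layers = layers

    def predict(self, x):
        for layer in self.layers:
            x = layer.forward(x)
        return x

    def loss(self, x, y) -> float:
        return float(np.mean((self.predict(x) - y) ** 2))

    def train(self, x, y, epochs: int = 1000, lr: float = 0.5):
        """One-call training loop: forward pass, MSE gradient, backward pass."""
        for _ in range(epochs):
            out = self.predict(x)
            grad = 2 * (out - y) / out.size        # derivative of the mean squared error
            for layer in reversed(self.layers):
                grad = layer.backward(grad, lr)
```

For example, `Network([2, 4, 1]).train(x, y)` builds and trains a 2-4-1 network, while `Network(layers=[Dense(...), Dense(...)])` accepts layers built by hand.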

All of the code for this project is available on my GitHub here.

Project #6: Visualizing AI Learning Algorithms - (Summer 2023)

- Implementing a visual representation of a neural network learning

This was somewhat of a continuation of my last project. Here, I generate a video of an AI learning an image; in the example above it's a simple smiley face, but it can be anything. The video is made by sampling an image from the AI after every training step and then, at the end of training, stringing the sampled images together. So you're literally watching an AI learn.

Originally, I used my own neural network code for the AI, but because it was CPU-bound it proved much too slow. So after the original implementation, I switched to PyTorch so that training could benefit from GPU acceleration through highly parallelized processing.
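The sample-after-every-step idea can be sketched without a GPU. This example makes assumptions the description above doesn't state: the network maps each pixel's (x, y) coordinate to a brightness value, a small synthetic circle stands in for the smiley face, and it uses NumPy rather than PyTorch so it runs anywhere. After every gradient step, it renders the full image and appends it to `frames`; writing those frames out with a video library would give the final clip.

```python
import numpy as np

SIZE = 16                       # image is SIZE x SIZE pixels
STEPS = 300                     # one video frame per training step
rng = np.random.default_rng(0)

# Network inputs: every pixel's (x, y) coordinate scaled to [-1, 1].
ys, xs = np.mgrid[0:SIZE, 0:SIZE]
coords = np.stack([xs.ravel(), ys.ravel()], axis=1) / (SIZE - 1) * 2 - 1

# Stand-in target image: a filled circle (1 = white, 0 = black), one row per pixel.
target = (np.hypot(coords[:, 0], coords[:, 1]) < 0.6).astype(float).reshape(-1, 1)

# Tiny coordinate network: 2 inputs -> 32 tanh units -> 1 sigmoid output (brightness).
W1, b1 = rng.normal(0.0, 2.0, (2, 32)), np.zeros(32)
W2, b2 = rng.normal(0.0, 0.1, (32, 1)), np.zeros(1)
lr = 0.5

frames = []
for _ in range(STEPS):
    # Forward pass over every pixel at once; the output is the current image.
    h = np.tanh(coords @ W1 + b1)
    out = 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))
    frames.append(out.reshape(SIZE, SIZE))

    # Backpropagate mean squared error through the sigmoid and tanh layers.
    g_out = 2 * (out - target) / len(target) * out * (1 - out)
    g_h = (g_out @ W2.T) * (1 - h ** 2)
    W2 -= lr * h.T @ g_out
    b2 -= lr * g_out.sum(axis=0)
    W1 -= lr * coords.T @ g_h
    b1 -= lr * g_h.sum(axis=0)

video = np.stack(frames)  # shape (STEPS, SIZE, SIZE): play these frames in order
```

Every frame requires a full forward pass over all pixels, which is exactly the kind of work that becomes slow on a CPU at real image sizes and motivated the move to GPU-accelerated PyTorch.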

All of the code is available on my GitHub here.

Project #7: Game Engine from Scratch - (Fall 2023)

- Made a game engine from scratch in C++ using OpenGL

I made a game engine from scratch in C++, using OpenGL as the interface for GPU acceleration along with some simple linear algebra. The engine supports macOS, Windows, and Linux, and features 3D model loading, a camera object, the ability to create and manipulate objects and their physics, and a very simple lighting engine.
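One piece of the linear algebra behind a camera object is the view matrix, which transforms the world so the camera sits at the origin looking down the -z axis (OpenGL's convention). The engine itself is written in C++; the sketch below uses Python and NumPy only to keep this page's examples in one language, and `look_at` follows the classic `gluLookAt` construction rather than the engine's actual code.

```python
import numpy as np


def normalize(v):
    return v / np.linalg.norm(v)


def look_at(eye, target, up=(0.0, 1.0, 0.0)):
    """4x4 view matrix for a camera at `eye` looking toward `target` (right-handed, OpenGL style)."""
    eye, target, up = (np.asarray(v, dtype=float) for v in (eye, target, up))
    f = normalize(target - eye)        # forward direction
    s = normalize(np.cross(f, up))     # right direction
    u = np.cross(s, f)                 # true up, orthogonal to both f and s
    view = np.identity(4)
    view[0, :3], view[1, :3], view[2, :3] = s, u, -f
    # Translation: express the negated eye position in camera space.
    view[:3, 3] = -view[:3, :3] @ eye
    return view
```

For a camera at (0, 0, 5) looking at the origin, the origin maps to (0, 0, -5) in camera space, five units straight ahead; a projection matrix would then map camera space to the screen.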

Project #8: Self-Hosted LLM Server & LLM System Integration - (Summer 2024)

- Built a physical server to host a Large Language Model (LLM), and designed and implemented a back-end architecture with an API, plus a user-friendly front-end web application.

Impressed by the release of ChatGPT, I decided to try out Large Language Models (LLMs) for myself. I wanted an assistant that could not just answer questions but also do useful things, like storing long-term memories to better serve the user, reading my documents, running automated Google searches, and doing complex calculations. I chose self-hosted, open-source LLMs to bring the cost down to zero and to avoid the model changes that happen quite often with server-side, closed-source solutions like ChatGPT. To accomplish this, I had to do three things: build a server to host and run the LLM, develop the back-end framework for the LLM system integration, and build a user-friendly front-end.

For the server, I decided to build it myself rather than buy a pre-built one, so that it would fit my needs better and cost less. I got most of the less important parts for free or dirt cheap off eBay; in the end, the only parts I paid for were the case, motherboard, and storage. To power the LLM, the server has a GTX 1080 Ti GPU. It runs Ubuntu Server, which I can easily SSH into, and I use Twingate, a remote-access tool similar to a VPN, so I can reach the server from anywhere.

As for the back-end, I first designed an API using FastAPI to interact with the LLM, so that I can reuse the LLM, or the whole LLM system, in future projects. To do this, I mimicked the specification of the OpenAI API, because it is the most widely used format for interfacing directly with LLMs; this makes it easy to switch between different open-source and closed-source models and to upgrade the model in the future. Once this was done, I chose Llama 3 (8 billion parameters), since at the time it was among the most capable open models of its size and was trained on an exceptionally large dataset (over 15 trillion tokens).
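To show what mimicking the OpenAI API means in practice, here is a standard-library-only sketch of the translation layer: it takes a request body in the OpenAI chat-completions shape (`model` plus a list of `messages`) and wraps a locally generated reply in the matching response shape. `generate_reply` is a hypothetical stand-in for the call into Llama 3, token `usage` counts are omitted, and in the real back-end this logic would live inside a FastAPI route such as `POST /v1/chat/completions`; the exact routes and fields the project implements aren't stated here.

```python
import time
import uuid


def generate_reply(messages: list) -> str:
    """Hypothetical placeholder for running the local model on the conversation."""
    return f"(echo) {messages[-1]['content']}"


def chat_completion(request: dict) -> dict:
    """Wrap a local model's reply in the OpenAI chat-completions response format."""
    messages = request["messages"]          # e.g. [{"role": "user", "content": "Hi"}]
    reply = generate_reply(messages)
    return {
        "id": f"chatcmpl-{uuid.uuid4().hex}",
        "object": "chat.completion",
        "created": int(time.time()),
        "model": request.get("model", "llama-3-8b"),   # hypothetical default model name
        "choices": [{
            "index": 0,
            "message": {"role": "assistant", "content": reply},
            "finish_reason": "stop",
        }],
    }
```

Because clients only ever see this shape, swapping the model behind `generate_reply`, or pointing the same client at a hosted OpenAI model, needs no client-side changes.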

After designing the back-end, I gave the LLM the ability to use different "functions", which you can think of as actions, such as search. For example, the search function gives the model the ability to search Google, local documents (provided by the user), and the chat history using RAG.

RAG, or Retrieval-Augmented Generation, takes a document (just a body of text) and splits it into roughly paragraph-sized chunks, each of which is converted into a 1024-dimensional embedding vector that captures the meaning of the text. Once you have all the vectors, you can run a similarity search by computing the cosine similarity between each chunk's vector and the embedding vector of a search query. The chunk with the highest similarity is returned as the result and used to guide the LLM's final answer.

The important thing about the way these "functions" are implemented is that it is incredibly easy to add any other function you want, allowing full customization.
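The retrieval step described above can be sketched in a few lines. In a real system, an embedding model produces the 1024-dimensional vectors; to keep this example self-contained, `embed` is a hypothetical stand-in that hashes words into a 1024-slot bag-of-words vector, so it only matches shared words rather than meaning. Splitting on blank lines approximates the roughly paragraph-sized chunks, and `chunk` and `search` are illustrative names.

```python
import re
import zlib

import numpy as np

DIM = 1024


def embed(text: str) -> np.ndarray:
    """Hypothetical stand-in for an embedding model: hashed bag-of-words, unit length."""
    vec = np.zeros(DIM)
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode()) % DIM] += 1.0
    norm = np.linalg.norm(vec)
    return vec / norm if norm else vec


def chunk(document: str) -> list:
    """Split a document into roughly paragraph-sized chunks (here: on blank lines)."""
    return [p.strip() for p in document.split("\n\n") if p.strip()]


def search(query: str, chunks: list, top_k: int = 1) -> list:
    """Return the chunks whose embeddings have the highest cosine similarity to the query."""
    matrix = np.stack([embed(c) for c in chunks])   # one unit vector per chunk
    scores = matrix @ embed(query)                  # dot product of unit vectors = cosine similarity
    best = np.argsort(scores)[::-1][:top_k]
    return [chunks[i] for i in best]
```

Normalizing every vector once up front turns cosine similarity into a single matrix-vector product, which is why this search stays fast even with many chunks.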

Here is a list of all of the default functions I have already implemented:

- Search - can search Google, local documents, or long-term chat history

- Calculator - can be used to calculate any mathematical expression

- Toggle Audio - toggles the audio (text-to-speech, TTS) on or off

- Clear Memory - clears the short-term chat history memory (useful for switching to an unrelated conversation and for more focused responses)

- Send Log - sends the current error log for debugging purposes

- List Functions - displays all of the above functions with a short description
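Because adding a function is meant to be easy, one common pattern is a registry: each action is a plain Python function tagged with a decorator, and the function name the LLM chooses is looked up and called with its arguments. The sketch below is hypothetical (the `tool` decorator, `TOOLS` dict, and `call_tool` names are not from the project) and implements only the calculator, using Python's `ast` module to evaluate arithmetic safely instead of `eval`, plus a `list_functions` entry generated from the same registry.

```python
import ast
import operator

TOOLS = {}  # hypothetical registry: function name -> (callable, description)


def tool(description: str):
    """Decorator that registers a function the LLM is allowed to call."""
    def register(fn):
        TOOLS[fn.__name__] = (fn, description)
        return fn
    return register


_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
        ast.Div: operator.truediv, ast.Pow: operator.pow, ast.USub: operator.neg}


def _eval(node):
    # Only numbers and basic arithmetic are allowed; anything else is rejected.
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.operand))
    raise ValueError("unsupported expression")


@tool("Evaluate an arithmetic expression, e.g. '2 * (3 + 4)'.")
def calculator(expression: str) -> float:
    return _eval(ast.parse(expression, mode="eval").body)


@tool("List every available function with a short description.")
def list_functions() -> str:
    return "\n".join(f"{name}: {desc}" for name, (_, desc) in TOOLS.items())


def call_tool(name: str, **kwargs):
    """Dispatch a function call chosen by the LLM."""
    if name not in TOOLS:
        raise KeyError(f"unknown function: {name}")
    fn, _ = TOOLS[name]
    return fn(**kwargs)
```

With this pattern, adding a new action is just another decorated function; its description automatically shows up in `list_functions`, which is also what the model would be shown when deciding which function to call.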

Finally, for the front-end, I started by implementing Jarvis, the assistant, as a Discord chatbot. That worked well, but Discord limited some features, so I built a custom web app instead, which gives me full control over every feature and can be hosted on the same server that runs the LLM interface API. I built the front-end using Flask and basic HTML, CSS, and JavaScript.